Are Quantum Computers Energy Hogs?

As many of you know, for the past five years or so, I’ve been following and posting about quantum technologies broadly and quantum computers in particular. When I meet people unfamiliar with the space, I’m surprised how often their initial reaction is “how are we going to handle the terrible carbon footprint of quantum computers?” Perhaps they are conflating quantum computing with AI models, or perhaps they just assume that the promised awesome capabilities of quantum computers will require huge amounts of energy. So, it may come as a surprise to readers that the energy requirements of quantum computers pale in comparison to those of AI models or supercomputers.

Let’s unpack that a bit, but first, let’s review how energy consumption is measured. A “watt” is a unit of power, which measures how fast energy is being used. For those of you who are technically minded, a watt is equal to one joule of energy per second, and a joule is roughly equal to the amount of energy it would take to lift a small apple (weighing 100 grams) up by one meter. In terms of electricity usage, the watts drawn by a circuit equal its volts x amps, akin to electrical “pressure” times “flow”. However, you don’t need to understand this terminology to appreciate power usage. Here’s some real-world context regarding approximate watt usage:

A small LED light bulb might use 10 watts (or 10W)

A microwave oven might use 1,000 watts (also referred to as a 1 kilowatt or 1 kW)

A large data-center rack or server room might use 1,000,000 watts (also known as a megawatt or 1 MW)

The entire world, roughly on average, uses electricity on the order of trillions of watts (1,000,000,000,000 watts, or one trillion watts, is a terawatt, also written as 1 TW)
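For readers who like to check the arithmetic, here is a minimal Python sketch of the definitions above. The helper names are hypothetical, and the 120-volt, 10-amp circuit is just an illustrative assumption rather than a figure from any particular device.

```python
# Worked check of the unit definitions above (all helper names are illustrative).

G = 9.81  # approximate standard gravity in m/s^2


def lift_energy_joules(mass_kg: float, height_m: float) -> float:
    """Energy needed to lift a mass by a given height: E = m * g * h."""
    return mass_kg * G * height_m


def electrical_power_watts(volts: float, amps: float) -> float:
    """Power drawn by a simple circuit: P = V * I."""
    return volts * amps


def format_watts(watts: float) -> str:
    """Render a wattage with the largest SI prefix that fits (kW, MW, GW, TW)."""
    for factor, unit in ((1e12, "TW"), (1e9, "GW"), (1e6, "MW"), (1e3, "kW")):
        if watts >= factor:
            return f"{watts / factor:g} {unit}"
    return f"{watts:g} W"


# A 100-gram apple lifted one meter takes a little under one joule.
print(lift_energy_joules(0.1, 1.0))

# Assumed example circuit: 120 volts at 10 amps.
print(format_watts(electrical_power_watts(120, 10)))

# The reference points from the list above.
for watts in (10, 1_000, 1_000_000, 1_000_000_000_000):
    print(format_watts(watts))
```

The apple calculation comes out just under one joule, which is why the "roughly one joule" description works.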

Quantum Computer Power Usage

When considering the power needs of a quantum computer, there are three primary drivers:

Quantum Processors: Quantum computers use qubits as their primary processing unit, and these qubits are arranged and made to perform quantum calculations through gate mechanisms. This is like what happens in the CPU in a classical computer, but qubits require.

Cooling systems: This is largely unique to superconducting quantum computers, like those used by IBM, Google, Rigetti and IQM among others.

Readout and control: There is added overhead to operate the system, handle error correction and other related computing activities.

The cooling systems are the biggest energy hogs for quantum computers, although not all modalities require cryogenic cooling, and in fact, different types of quantum computers have different power requirements. Cutting to the chase, here is an approximation of power usage by quantum computer type:

Neutral Atoms – such as QuEra’s 256-qubit Aquila: Less than 7,000 watts (7 kW)

Trapped Ions – such as Quantinuum’s H2: Approximately 10,000 watts (10 kW)

Superconducting – such as IBM’s 127-qubit Eagle or Google’s 53-qubit Sycamore: 10,000 – 25,000 watts (10 kW to 25 kW)

In other words, even today’s most power-hungry quantum computers consume about 25 kW of power, roughly equivalent to that of the HVAC system in your office.

Supercomputer Power Usage

Let’s now contrast that with the power usage of some of today’s most powerful classical computers. Here are some metrics regarding three such supercomputers:

Frontier (United States) – Housed at Oak Ridge National Laboratory, Frontier is the world’s first exascale supercomputer, capable of over 1.35 exaflops (more than a billion-billion calculations per second). Built by Hewlett Packard Enterprise using AMD CPUs and GPUs, it consumes about 21 megawatts of power and leads in raw performance, tackling tasks from climate modeling to nuclear physics.

Summit (United States) – Also located at Oak Ridge, Summit was Frontier’s predecessor and the fastest computer in the world from 2018 to 2020, delivering 200 petaflops (a petaflop is one quadrillion operations per second) at peak performance. Powered by IBM Power9 CPUs and NVIDIA Tesla V100 GPUs, Summit consumed around 13 megawatts and enabled breakthroughs in genomics, materials science, and cosmology.

Fugaku (Japan) – Developed by RIKEN and Fujitsu using ARM-based processors, Fugaku topped the global supercomputer rankings from 2020 through 2022. It achieved nearly 442 petaflops on scientific workloads and was designed for versatility, contributing to research in drug discovery, weather forecasting, and pandemic modeling before being surpassed by Frontier. It is estimated to have used several tens of megawatts.

To put this into further perspective, supplying Frontier’s 21 megawatts costs more than $23 million per year. That’s just to power it.
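A rough sketch of how a figure like that can be reproduced is below. The electricity rate of $0.13 per kilowatt-hour is my own assumption for illustration, not a published price for Oak Ridge, and the sketch assumes the machine draws its full 21 megawatts around the clock.

```python
# Back-of-the-envelope annual electricity cost for a machine with constant draw.

HOURS_PER_YEAR = 24 * 365  # 8,760 hours, ignoring leap years


def annual_energy_cost_usd(power_mw: float, usd_per_kwh: float) -> float:
    """Cost of running a constant load for one year at a flat rate."""
    energy_kwh = power_mw * 1_000 * HOURS_PER_YEAR  # MW -> kW, then kWh per year
    return energy_kwh * usd_per_kwh


ASSUMED_RATE_USD_PER_KWH = 0.13  # hypothetical flat rate, for illustration only

cost = annual_energy_cost_usd(21, ASSUMED_RATE_USD_PER_KWH)
print(f"${cost:,.0f} per year")
```

At that assumed rate the result lands a little under $24 million, in line with the figure quoted above. A different rate or a lower average load would move the number proportionally.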

As you’ll note, these machines consume orders of magnitude more power than quantum computers, although it’s an apples-to-oranges comparison given that today’s quantum computers can’t calculate much, let alone deliver petaflops or exaflops of performance. However, it’s useful to note that the computational capacity of quantum computers increases exponentially with the number of qubits, thanks to entanglement, while their power usage remains the same or, at most, scales linearly. Plus, even if scaled-up quantum computers require 10x or 100x more energy than they currently use, that would still be substantially less than the usage of classical supercomputers.
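One way to get a feel for that exponential growth is to count how many numbers a classical machine would need to store to describe an n-qubit state: the count doubles with every added qubit. The sketch below assumes each amplitude is stored as a 16-byte complex number, and the function names are hypothetical. State-space size is only a proxy here, not a direct measure of useful computing power.

```python
# Size of the classical description of an n-qubit state (illustrative sketch).

BYTES_PER_AMPLITUDE = 16  # assumption: one complex number as two 64-bit floats


def state_vector_amplitudes(num_qubits: int) -> int:
    """Number of complex amplitudes in a general n-qubit state: 2^n."""
    return 2 ** num_qubits


def state_vector_bytes(num_qubits: int) -> int:
    """Memory needed to hold every amplitude at the assumed precision."""
    return state_vector_amplitudes(num_qubits) * BYTES_PER_AMPLITUDE


# 53 matches the Sycamore qubit count mentioned earlier; 10 and 30 are for scale.
for n in (10, 30, 53):
    print(f"{n} qubits: {state_vector_amplitudes(n):,} amplitudes, "
          f"{state_vector_bytes(n):,} bytes")
```

Each extra qubit doubles both numbers, while nothing in the hardware descriptions above suggests the power draw doubles along with it.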

Artificial Intelligence (AI) Power Usage

AI workloads represent the fastest-growing component of data center energy demand. AI model training is extremely energy-intensive, and inference energy usage, while less intensive than training, adds up quickly with scale. Here are a few examples and metrics for model training (note that these are totals of energy used, measured in megawatt-hours, rather than rates of power draw):

Llama 3 (Meta’s 2024 model) training consumed about 581 megawatt-hours (MWh), which is roughly the same as what several large industrial facilities such as steel plants would draw over one hour.

Training the GPT-3 model required 1,287 MWh, which is the equivalent of what an average household might use over 120 years.

Training the GPT-4 model took an estimated 40x more energy than GPT-3, with estimates ranging from 51,773 MWh to 62,319 MWh, using about 25,000 Nvidia A100 GPUs over a 90–100-day training period.
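The comparisons above can be sanity-checked with a few lines of Python. This sketch treats the training figures as energy totals in megawatt-hours and assumes an average household uses about 10.7 MWh of electricity per year. That household figure is my assumption for illustration, and the function names are hypothetical.

```python
# Sanity checks on the training-energy comparisons (illustrative sketch).

GPT3_TRAINING_MWH = 1_287
GPT4_TRAINING_MWH_RANGE = (51_773, 62_319)
ASSUMED_HOUSEHOLD_MWH_PER_YEAR = 10.7  # assumption, not from the cited sources


def household_years(energy_mwh: float,
                    household_mwh_per_year: float = ASSUMED_HOUSEHOLD_MWH_PER_YEAR) -> float:
    """How many years one household would take to use this much energy."""
    return energy_mwh / household_mwh_per_year


def average_power_mw(energy_mwh: float, days: float) -> float:
    """Average power draw if the energy is spread evenly over the given days."""
    return energy_mwh / (days * 24)


print(f"GPT-3: {household_years(GPT3_TRAINING_MWH):.0f} household-years")

low, high = GPT4_TRAINING_MWH_RANGE
print(f"GPT-4 vs GPT-3: {low / GPT3_TRAINING_MWH:.1f}x to {high / GPT3_TRAINING_MWH:.1f}x")

# Lowest average draw: low estimate over 100 days; highest: high estimate over 90 days.
print(f"GPT-4 average draw: {average_power_mw(low, 100):.1f} MW "
      f"to {average_power_mw(high, 90):.1f} MW")
```

Under these assumptions, the GPT-3 figure works out to roughly 120 household-years, and the GPT-4 estimates imply an average draw in the low-to-high twenties of megawatts sustained for about three months.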

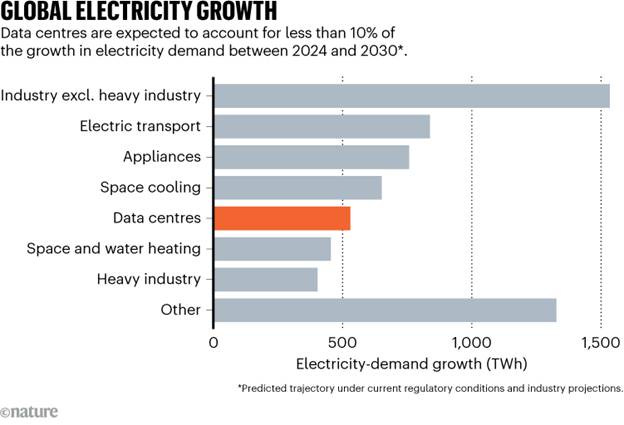

Data centers already consume a large portion of overall energy and it’s expected that their energy requirements will double by 2030 as AI usage continues to grow. The following Nature graphic highlights this metric:

Putting It All Together

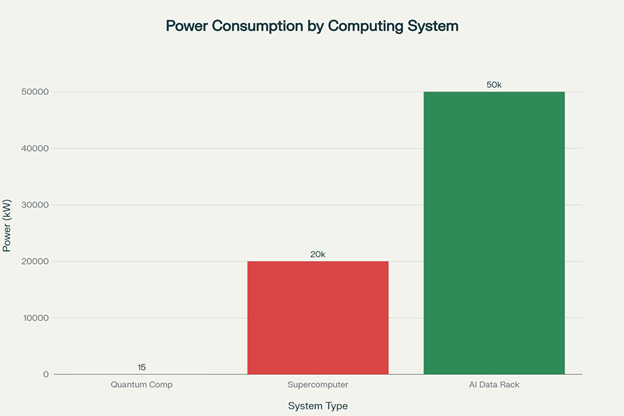

The following chart highlights the relative energy consumption of quantum computing vs supercomputers vs AI data racks and the result will likely surprise you.

As you’ll note, the energy demands of quantum computing are so far below those of supercomputers or AI systems that they barely register on the chart. Even if we are underestimating, by 10x or 100x, the eventual power usage of quantum computers with 1,000,000 qubits (a threshold many cite as required for true commercial advantage), the relative size of the bars in this graph will not change much.
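To make the 10x and 100x headroom concrete, here is a small sketch that scales the roughly 25 kW upper figure for today’s superconducting machines and compares it with Frontier’s roughly 21 MW. The scaling factors are hypothetical what-if values, not forecasts.

```python
# What-if comparison: scaled quantum power draw vs. Frontier (illustrative sketch).

QUANTUM_KW = 25        # upper estimate for today's superconducting systems
FRONTIER_KW = 21_000   # about 21 MW


def scaled_power_kw(base_kw: float, factor: float) -> float:
    """Power draw after multiplying today's figure by a what-if factor."""
    return base_kw * factor


for factor in (1, 10, 100):
    scaled = scaled_power_kw(QUANTUM_KW, factor)
    share = scaled / FRONTIER_KW
    print(f"{factor:>3}x: {scaled:,.0f} kW, {share:.1%} of Frontier")
```

Even the 100x case comes to 2.5 MW, which is well under an eighth of Frontier’s draw.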

So, the carbon footprint of quantum computing is not something that society should be worried about. In fact, just the opposite is true: as quantum computers become more powerful, they may help unlock things like more efficient batteries, improved fertilizer production methods (the current Haber-Bosch process is notoriously energy demanding) and optimized logistics routing, all leading to energy consumption improvements. In any case, if you are a quantum computing supporter and someone corners you at a cocktail party assailing the heavy carbon footprint that quantum computers will leave, you can disabuse them of that incorrect assumption.

Disclosure: I wrote this article myself and express it as my own opinion. This post should not be used for investment decisions, and the content is for informational purposes only.

Boger, Yuval, “The dual-pronged energy-savings potential of quantum computers,” Data Center Dynamics Industry Views, June 26, 2023

Champion, Zach, “Optimization could cut the carbon footprint of AI training by up to 75%,” University of Michigan Engineering News, as updated January 15, 2025

Chen, Sophia, “Data centres will use twice as much energy by 2030 – driven by AI,” Nature, April 10, 2025

Semba, Kurt, “Artificial Intelligence, Real Consequences: Confronting AI’s Growing Energy Appetite,” Extremenetworks.com, August 15, 2024

Certain metrics and the bar graph courtesy Perplexity AI. (2025, October 21)
